As a machine learning practitioner/student/fan since 2013, I’m always on the lookout for novelties. I first thought that Generative Adversarial Networks were technically cool, but still a bit “procedural and predictable”. And then came the next generation of generative models, based on transformers. I was very impressed with GPT-3’s apparent creativity and used OpenAI Codex a lot, through the essential GitHub Copilot (if you write code and don’t use Copilot yet, I strongly recommend that you give it a try!).

Then came the great era of limitless visual possibilities and super weird, surprisingly beautiful images: text2image models! Large text-to-image models marked a remarkable leap in the evolution of AI, enabling high-quality and diverse synthesis of images from a given text prompt. It started with VQGAN+CLIP, then came DALL·E (OpenAI), followed by (the wonderful) Stable Diffusion (Stability AI), Imagen (Google) and Midjourney.

After reading about those for a while, and trying out text-to-video, image-to-text, text-to-3D, and image-to-sound, I heard about Dreambooth and wanted to try it. So I fine-tuned Stable Diffusion 1.5 using a few pictures of myself and saved the weights for later use (I used code from this Python notebook).

After that, I looked for prompt inspiration on these two websites:

Then I generated a few hundred pictures, and I was like: WOW.
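For readers who want to try the same thing, here is a minimal sketch of what the generation step could look like with the Hugging Face `diffusers` library. It is not the exact code from the notebook I used. It assumes the fine-tuned weights were saved in the `diffusers` folder format, and the folder name, the subject token `sks person`, the prompt template and the helper names `build_prompts` and `generate` are all made up for illustration.

```python
from pathlib import Path

# Hypothetical values: the folder holding the fine-tuned weights and the
# token that stood for the subject during Dreambooth training.
WEIGHTS_DIR = "./dreambooth-output"
SUBJECT_TOKEN = "sks person"


def build_prompts(styles, subject=SUBJECT_TOKEN):
    """Combine the subject token with each style description."""
    return [f"portrait of {subject}, {style}" for style in styles]


def generate(prompts, weights_dir=WEIGHTS_DIR, out_dir="generated", images_per_prompt=4):
    """Load the fine-tuned pipeline and save a few images for every prompt."""
    # Imported here so that build_prompts stays usable without a GPU setup.
    import torch
    from diffusers import StableDiffusionPipeline

    # Assumes a CUDA GPU is available (a free Colab GPU runtime, for example).
    pipe = StableDiffusionPipeline.from_pretrained(weights_dir, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for i, prompt in enumerate(prompts):
        result = pipe(
            prompt,
            num_images_per_prompt=images_per_prompt,
            num_inference_steps=50,
            guidance_scale=7.5,
        )
        for j, image in enumerate(result.images):
            image.save(out / f"prompt{i:03d}_{j}.png")
```

Calling `generate(build_prompts(["oil painting, dramatic lighting", "as an astronaut, digital art"]))` would then write four images per prompt into the `generated` folder. A longer list of styles is how you get to a few hundred pictures.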

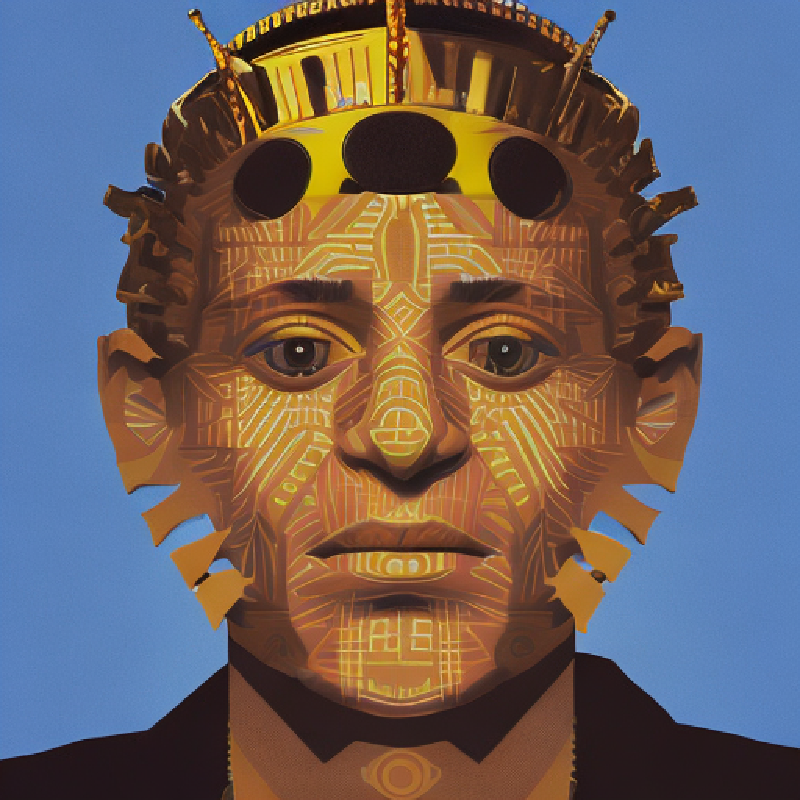

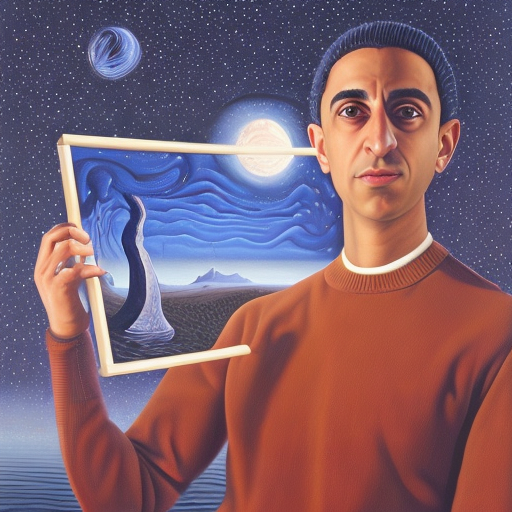

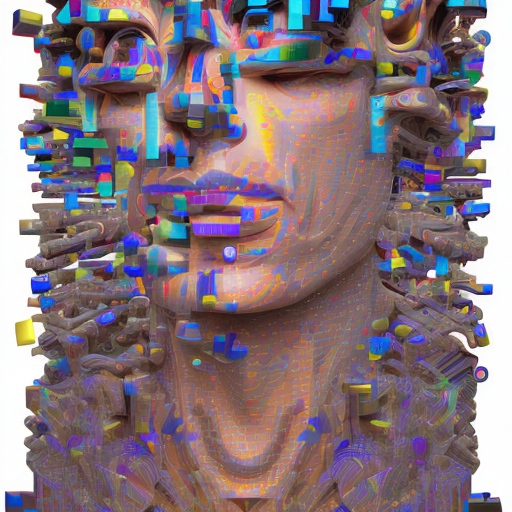

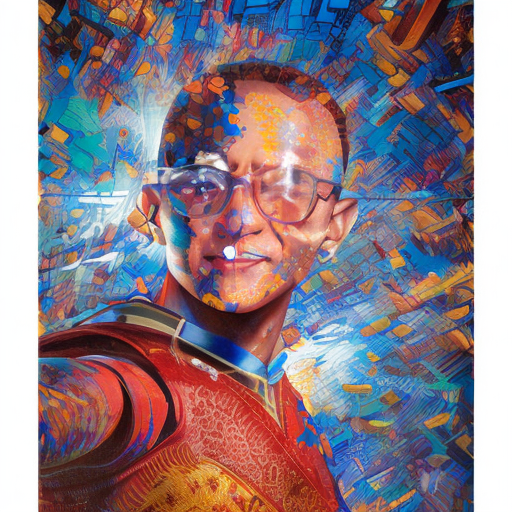

Here’s a quick selection of fun pics:

Amazing, isn’t it? Please note that Stable Diffusion 2.0 is on its way too! That means the text2image saga is not finished yet, and we’ll probably see further exciting improvements in the efficiency and quality of generated content.

Links:

- Fine-tuning your own Stable Diffusion model: go HERE. You can do all of it for free using Google Colab notebooks. Use about a dozen pictures in which your face is clearly visible (avoid hats, glasses, etc.), taken from different angles

- Stable Diffusion’s Official website

- Trying out Stable Diffusion without any code: go HERE

- Ten Years of Image Synthesis

- The Illustrated Stable Diffusion

Thanks for reading! 😊👋

Note: this article was written with help from GPT-3 on LEX

Anas EL KHALOUI